CarabID and TephritID: Using Image-Based Machine Learning Technologies to Identify Ground Beetles and Fruit Flies in Aotearoa

Machine Learning and Taxonomy | Angie Zhu and Hope Ryu

An unfortunate decline in taxonomic expertise, resources, and time has added fuel to the development of machine learning for image-based insect classification. Aotearoa’s species are known for their exceptional endemicity, which gives such tools particular value in conservation and biosecurity. In this study, we used images of fruit flies and ground beetles from the New Zealand Arthropod Collection (NZAC) to test two deep learning web applications, aptly named TephritID and CarabID. Are machine learning tools ready to supplement, and eventually replace, human expertise?

Introduction

Earth houses around 8.7 million distinct species [1], and terrestrial invertebrates, including insects, form a large part of this [2]. Although insects are understudied compared to vertebrates, they are crucial to ecosystem functioning through processes such as pollination, and studying them underpins biosecurity management and our understanding of species interactions [3]. This is particularly relevant in Aotearoa, whose unique geography has conserved both its historical biodiversity and its endemism [2]. However, this also means that newly introduced species pose a particular threat to indigenous biota that are susceptible to displacement [4], [5]. Taken together, these factors show how accurate and efficient classification of taxa can help prevent the rapid colonisation and ecological perturbations caused by invasive species, and how it is crucial for monitoring threatened species. The fly family Tephritidae, commonly known as fruit flies, and the native ground beetle family Carabidae serve as excellent examples of these two scenarios, respectively.

In parts of Africa, Bactrocera dorsalis has been observed displacing the indigenous Ceratitis cosyra on mango [6]. The aggressive, colonising nature of introduced fruit flies also threatens Aotearoa’s agroecosystems, where invasive species can establish themselves in unsustainable numbers [5]. In Australia, approximately 4.8 billion dollars’ worth of produce is lost each year to fruit fly damage [7]. Meanwhile, New Zealand has at least 600 ground beetle species (Coleoptera: Carabidae), most of them endemic [8]. New Zealand’s native ground beetles are also among the most threatened invertebrate taxa and are a top priority for conservation [3].

Despite Aotearoa’s prioritisation of biosecurity and conservation, current methods for identifying and classifying insect populations are time-consuming and demand a significant amount of work from taxonomists and entomologists [9]. Over the last few decades, however, machine learning has been developed to aid image and video identification for various purposes. Early development was slow and simple, and it quickly became clear that although classifying objects in images is an intrinsic human skill, it comes much less naturally to machines [10]. Convolutional neural networks (CNNs) provided a scalable solution to this problem. CNNs are biologically inspired deep learning architectures designed to recognise and differentiate visual features in images, such as specific patterns and shapes [11]. They have already been widely used to identify and classify insect groups from images [9], [12], [13], showing great potential for related applications. Similar to the study by Hansen et al. [9], two web applications, “CarabID” and “TephritID”, have recently been developed by Dr Aaron Harmer at Manaaki Whenua Landcare Research. Both are powered by a CNN model based on the open-source YOLO (You Only Look Once) object detection framework. The model was trained using Roboflow, an annotation and model-training platform, to ensure correct labelling and annotation of the target shapes and key features in the ground beetle and fruit fly images.

This report aims to test how accurately the web applications CarabID and TephritID identify taxa from specimen images of the families Carabidae and Tephritidae. The results will help the applications’ developers understand the model’s current performance and the accuracy behind its confidence scores, so that they can modify the model if required. In the future, taxonomists and entomologists may use this model to identify and monitor the distribution and activity of native and endemic beetles and to flag pest fruit fly occurrences more efficiently, with less manual labour and less need for extensive expertise.

Method

Ground beetles (Carabidae)

Ground beetle specimens (Carabidae) were taken from the John Nunn Collection in the New Zealand Arthropod Collection (NZAC). A total of 464 images of various insects were used, divided into four groups: the baseline (original images of ground beetle specimens used to train the model, n = 36), new ground beetle species (n = 341), other Coleoptera species (n = 38), and other insects (n = 49).

The dorsal views of 150 pinned New Zealand ground beetle species were imaged with VDPassport and EOS Utility software, using a Canon EOS RP camera with a Canon 50mm macro lens. These images were included in the “other Coleoptera” group for AI testing. Where appropriate, we manipulated the test images by removing the background, adding a white background, removing scale bars, and rotating the image so that the insect’s head faced right. The resulting images were put into the CarabID model for testing, with the minimum confidence threshold set to 25%. Pre-processing corrections, namely converting to greyscale, standardising colour contrast, blurring to reduce grain noise, and reducing the aspect ratio, were applied to each tested image so that the convolutional neural network focused on the diagnostic features of the specimen, similar to the study by Ward and Martin [14] (Figure 1).

All images were compared to the baseline model, and we ensured that the whole body (thorax plus abdomen) of each specimen was outlined (Figure 1). Genus-level accuracy was determined using the scores given by CarabID and recorded for each specimen within each group. In addition, we combined Carabidae genera into tribes and tested whether the proportion of correct predictions was higher at the tribe level than at the genus level.
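The genus-versus-tribe comparison amounts to mapping each true and predicted genus up one rank and recounting the matches. The Python sketch below assumes each result is stored as a true genus plus a predicted genus (or `None` when CarabID returned a score of 0). The genus and tribe names are hypothetical placeholders, not the lookup used in this study.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical genus-to-tribe lookup with placeholder names (not real taxonomy).
GENUS_TO_TRIBE = {
    "GenusA": "Tribe1",
    "GenusB": "Tribe1",
    "GenusC": "Tribe2",
}


@dataclass
class Result:
    true_genus: str
    predicted_genus: Optional[str]  # None when the app gave no prediction (score 0)


def rank_of(genus: Optional[str], level: str) -> Optional[str]:
    """Return the genus itself, or its tribe, depending on the rank being compared."""
    if genus is None:
        return None
    if level == "genus":
        return genus
    return GENUS_TO_TRIBE.get(genus)  # None if the genus is missing from the lookup


def proportion_correct(results: List[Result], level: str) -> float:
    """Share of results whose prediction matches the true taxon at `level`."""
    if not results:
        return 0.0
    hits = 0
    for r in results:
        predicted = rank_of(r.predicted_genus, level)
        if predicted is not None and predicted == rank_of(r.true_genus, level):
            hits += 1
    return hits / len(results)


# Made-up toy results, not data from this study.
example_results = [
    Result("GenusA", "GenusA"),  # right genus, right tribe
    Result("GenusA", "GenusB"),  # wrong genus, right tribe
    Result("GenusC", "GenusA"),  # wrong genus, wrong tribe
    Result("GenusC", None),      # no prediction
]
print(proportion_correct(example_results, "genus"))  # 0.25
print(proportion_correct(example_results, "tribe"))  # 0.5
```

A wrong genus within the right tribe counts as correct only at tribe level. So, as long as every genus appears in the lookup, the tribe-level proportion can never fall below the genus-level one.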

Figure 2a (top): Unprocessed image of a Sphenella fascigera wing. Figure 2b (bottom): the same wing with pre-processing corrections applied.

A range of ground beetle taxa were used to test the identification model, as well as images of insects from other orders.

Fruit flies (Tephritidae)

All specimens are from the New Zealand Arthropod Collection (NZAC) held at Manaaki Whenua Landcare Research. A suite of tephritid taxa was used to test the model, along with other Diptera and various taxa from the orders Hymenoptera and Lepidoptera. To obtain isolated and accurate images, the wings were gently detached at the tegula using needle-point dissecting forceps. Once separated, each wing was held flat under a microscope slide so that its shape was not distorted in three dimensions. Some variation arose from specimen condition, setting position, and removal technique, and it was not always possible to present the alula extended and flat. Significantly deteriorated and particulate-covered wings were not photographed.

Images of flattened wings were taken on a Leica EZ4W stereo microscope using the built-in camera and external Leica Application Suite (LAS) program.

Pre-processing corrections are applied in-app to standardise images and allow the CNN model to concentrate on diagnostic characteristics. These include a greyscale colour conversion, noise reduction through blurring, standardisation of brightness and contrast, and a square aspect ratio conversion (Figure 2b).
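The four corrections can be sketched in plain Python on a small image stored as rows of (R, G, B) tuples. This is an illustrative reimplementation, not the code that TephritID or Roboflow actually runs. The ITU-R BT.601 luma weights, the 3x3 box blur, min-max contrast stretching, and white padding (rather than stretching) to reach a square aspect ratio are all our assumptions.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Gray = List[List[float]]


def to_greyscale(img: List[List[RGB]]) -> Gray:
    """Weighted sum of channels using ITU-R BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]


def box_blur(img: Gray) -> Gray:
    """3x3 mean filter to smooth grain noise; edge pixels average in-bounds neighbours only."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        new_row = []
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            new_row.append(sum(vals) / len(vals))
        out.append(new_row)
    return out


def standardise_contrast(img: Gray) -> Gray:
    """Min-max stretch so the darkest pixel becomes 0 and the brightest 255."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # a flat image has no contrast to stretch
        return [[0.0 for _ in row] for row in img]
    return [[(v - lo) * 255.0 / (hi - lo) for v in row] for row in img]


def pad_to_square(img: Gray, fill: float = 255.0) -> Gray:
    """Centre the image on a white square canvas so height equals width without distortion."""
    h, w = len(img), len(img[0])
    size = max(h, w)
    top, left = (size - h) // 2, (size - w) // 2
    out = [[fill] * size for _ in range(size)]
    for y in range(h):
        for x in range(w):
            out[top + y][left + x] = img[y][x]
    return out


def preprocess(img: List[List[RGB]]) -> Gray:
    """Apply the corrections in the order listed: greyscale, blur, contrast, square."""
    return pad_to_square(standardise_contrast(box_blur(to_greyscale(img))))


example = [
    [(0, 0, 0), (255, 255, 255), (0, 0, 0)],
    [(0, 0, 0), (255, 255, 255), (0, 0, 0)],
]
square = preprocess(example)
print(len(square), len(square[0]))  # 3 3
```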

A total of 371 wing images were run through TephritID with the minimum prediction confidence set to the lowest threshold (25%). Input images (see example in Figure 2a) were uploaded to the program. Each pre-processed output image resembled Figure 2b, with the aforementioned corrections applied and a bounding box labelled with the predicted taxon. The web application also reported confidence scores between 0 (‘could not make a prediction with sufficient confidence’) and 1.0 (highest-confidence prediction).
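The 25% threshold and the score of 0 for ‘could not make a prediction’ behave like a standard confidence cut-off on a detector’s outputs. The sketch below assumes that the detector returns a list of (label, confidence) pairs and that a score exactly at the threshold is kept. Both are our assumptions about the apps’ internals, and the labels are placeholders.

```python
from typing import List, Tuple

MIN_CONFIDENCE = 0.25  # the lowest threshold, as used in this study

Detection = Tuple[str, float]  # (predicted taxon, confidence between 0 and 1)


def top_prediction(detections: List[Detection],
                   threshold: float = MIN_CONFIDENCE) -> Detection:
    """Return the most confident detection at or above `threshold`.

    Reports ("no prediction", 0.0) when nothing clears the threshold,
    mirroring the app's score of 0.
    """
    kept = [d for d in detections if d[1] >= threshold]
    if not kept:
        return ("no prediction", 0.0)
    return max(kept, key=lambda d: d[1])


print(top_prediction([("TaxonA", 0.91), ("TaxonB", 0.30)]))  # ('TaxonA', 0.91)
print(top_prediction([("TaxonA", 0.12)]))                     # ('no prediction', 0.0)
```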

Taxon predictions and their corresponding confidence scores from both models were recorded in an Excel spreadsheet for each category. Some fruit fly wing images were altered to test whether simple changes to orientation and aspect ratio would affect the predictions. For some modified ground beetle images in the “new species” and “other Coleoptera” groups, the unmodified image of the same specimen was also tested to determine its prediction accuracy. CarabID’s scores for these original, unmodified images were then compared with the scores for the modified images used in our main dataset to determine whether the two differed. Image manipulation details and alternate scores were recorded where applicable.
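Comparing each specimen’s modified and unmodified scores is a paired comparison. A minimal sketch follows, assuming the two score lists are aligned so that position i refers to the same specimen in both. The function name and toy values are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def compare_paired_scores(unmodified: List[float],
                          modified: List[float]) -> Dict[str, float]:
    """Summarise how confidence scores change when the same images are modified."""
    if len(unmodified) != len(modified):
        raise ValueError("score lists must be paired one-to-one by specimen")
    diffs = [m - u for u, m in zip(unmodified, modified)]
    return {
        "n": len(diffs),
        "changed": sum(1 for d in diffs if d != 0),
        "higher_when_modified": sum(1 for d in diffs if d > 0),
        "mean_difference": mean(diffs) if diffs else 0.0,
    }


# Made-up toy scores for three specimens, not data from this study.
summary = compare_paired_scores([0.0, 0.5, 0.4], [0.6, 0.5, 0.3])
print(summary["changed"], summary["higher_when_modified"])  # 2 1
```

If a significance test is wanted, a paired test such as the Wilcoxon signed-rank test could be applied to the same differences.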

Results

Ground beetles (Carabidae)

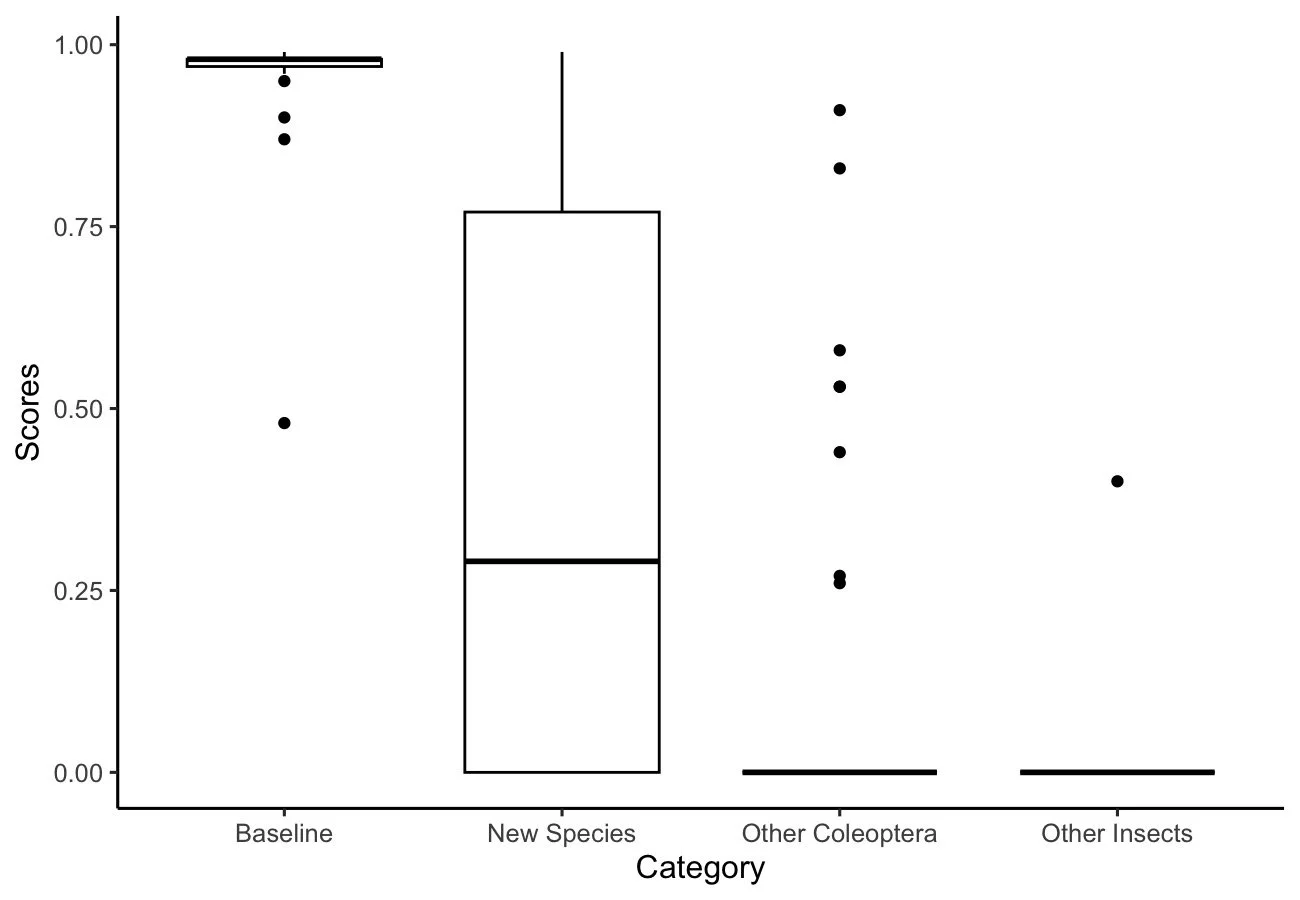

Across all groups, images in the baseline group received the highest confidence scores and images of other insects the lowest, with almost all of the latter scoring zero. Scores in the new species group were more widely distributed, although their average was higher than those of the other Coleoptera and other insects groups (Figure 3).

Figure 3: Distribution of scores for images within each insect group. The baseline showed the highest overall scores, while other insects showed the lowest.

The distribution of correctly identified images of Carabidae showed similar patterns at the genus and tribe levels. Most of the baseline, other Coleoptera, and other insect specimens showed a high level of detection accuracy by CarabID. However, accuracy appeared much lower for the new species group, whose confidence scores were also highly variable (Figure 4).

Comparing CarabID’s accuracy at the genus and tribe levels showed that grouping Carabidae species into tribes gave a higher proportion of correctly identified images than the genus level for both the baseline and new species groups. Accuracy did not differ between levels for the other Coleoptera and other insect groups, because these insects do not belong to any of the tribes that CarabID can currently identify.

Additionally, most of the detections for baseline, other Coleoptera, and other insects showed a relatively high detection accuracy at both the genus and the tribe level, whereas the level of detection accuracy for the new species group was lower, with a higher proportion of images being incorrectly identified (Figure 5).

Interestingly, for most of the images tested, CarabID gave a different score for the manipulated version than for the unmanipulated one, and scores were generally higher for edited images (Figure 6). None of the 229 unmodified images tested from the new species (n = 191) and other Coleoptera (n = 38) categories were correctly identified to the genus level, and only three images from the new species group were correctly identified to the tribe level (Figure 6).

Check out pages 6, 7, & 8 of Version 5 Edition 1 for relevant graphs.

Fruit flies (Tephritidae)

A total of 371 wings were used from five groups of images: the baseline, which contained images used to train the model (n = 41); same species, containing images of the same taxa but different specimens (n = 33); new species, containing different species within the same genera (n = 43); other flies, containing Diptera from completely different genera and families (n = 192); and other insects, containing various moth and wasp taxa (n = 58). The highest confidence score was 1.0, for Procecidochares utilis in the baseline group (Figures 7, 8, 9). Large numbers of outliers were seen, particularly for the baseline, new species, and other flies groups. All distributions appear negatively skewed, with all median values above 0.78 (the lowest median being species confidence for other insects). Species confidence scores varied more overall than genus confidence scores (Figure 7).

At the species level, no prediction scoring above 0 was correct for the ‘new species’, ‘other flies’, and ‘other insects’ categories. For the baseline, 19 of the 27 correct predictions scored above 0.9, as did 12 of the 26 for the same species group. Overall, incorrect predictions (mostly false positives) far outnumbered correct ones (true positives or negatives). Genus scores were generally less variable: for the baseline, 27 of 32 correct scores were greater than 0.9, compared with 15 of 21 for same species and all 20 for new species. Mean confidence scores for the baseline, same species, new species, other flies, and other insects groups were 0.85, 0.80, 0.79, 0.76, and 0.74 at the species level, and 0.93, 0.78, 0.87, 0.88, and 0.75 at the genus level.
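Sorting each wing prediction into true or false positives and negatives makes the correct and incorrect tallies reproducible. The sketch below uses a simple scheme of our own. A named prediction is a true positive if it matches the specimen’s taxon and a false positive otherwise. A score of 0 (no prediction) is a true negative for taxa outside the training set and a false negative for taxa inside it. Taxon names are placeholders.

```python
from collections import Counter
from typing import Iterable, Optional, Set, Tuple


def classify_outcome(true_taxon: str, predicted: Optional[str],
                     trained_taxa: Set[str]) -> str:
    """Label one prediction as TP, FP, TN or FN under the scheme described above."""
    if predicted is not None:
        return "TP" if predicted == true_taxon else "FP"
    return "FN" if true_taxon in trained_taxa else "TN"


def tally(records: Iterable[Tuple[str, Optional[str]]],
          trained_taxa: Set[str]) -> Counter:
    """Count outcomes for (true taxon, predicted taxon or None) pairs."""
    return Counter(classify_outcome(t, p, trained_taxa) for t, p in records)


trained = {"TaxonA", "TaxonB"}
toy_records = [
    ("TaxonA", "TaxonA"),  # correct species
    ("TaxonA", "TaxonB"),  # wrong species
    ("MothX", None),       # out-of-scope insect, correctly given no prediction
    ("FlyY", "TaxonA"),    # out-of-scope fly given a wrong name
    ("TaxonB", None),      # trained taxon missed
]
counts = tally(toy_records, trained)
correct = counts["TP"] + counts["TN"]
incorrect = counts["FP"] + counts["FN"]
print(correct, incorrect)  # 2 3
```

Under this scheme, every named species-level prediction for a ‘new species’, ‘other flies’, or ‘other insects’ specimen is a false positive, because none of those species were in the training set.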

Discussion

The experiments demonstrated the potential of TephritID and CarabID to predict their namesake taxa from images of wings and whole bodies, respectively. However, the results imply that the models are not yet as robust as needed at their current stage of development. Several limitations have been identified, providing a scaffold for future steps.

Accuracy at different taxonomic ranks

The TephritID model output reasonable mean confidence values for species-level identification: 0.85 (baseline), 0.80 (same species), 0.79 (new species), 0.76 (other flies), and 0.74 (other insects). At the broader genus level, mean confidence was, unsurprisingly, generally higher, at 0.93, 0.78, 0.87, 0.88, and 0.75 for the same groups (the same species group being the exception), and less variable overall, with values clustered towards the top of the range. Testing the CarabID model showed a similar trend: accuracy was higher and less variable when images were grouped at the higher taxonomic rank of tribe, which comprises multiple genera. These findings indicate promising progress and a decent current model. At the species level (Figure 8), however, incorrect predictions from TephritID clearly outnumber correct ones (315:53). The same is true for CarabID at the genus level, where the range of insects was more confined. These inaccuracies are driven largely by the ‘New species’, ‘Other flies/Other Coleoptera’, and ‘Other insects’ groups. Additionally, scores decreased from the ‘Baseline’ to the ‘Other insects’ group for both models, with the baseline images used in model training scoring highest and ‘Other insects’ scoring lowest.

However, for specimens in the ‘new’ and ‘other’ groups, the models returned a wide range of scores and predictions of highly varied accuracy, when they should have output low or zero scores for taxa they did not recognise. Because TephritID and CarabID were trained to identify only certain fruit fly and ground beetle taxa to species and genus level, they would be expected to give a score of zero to any image from the ‘Other flies/Other Coleoptera’ and ‘Other insects’ groups. Images from ‘Other insects’ very likely had more obviously different features than those in the ‘New species’ and ‘Other flies/Other Coleoptera’ groups, which would increase the proportion of these specimens that were correctly identified (that is, correctly given no prediction). This could also explain why a much higher proportion of images from the ‘New species’ group was incorrectly identified: those specimens belonged to the same orders (Diptera and Coleoptera) as the training taxa, and their similar features may have made accurate detection more difficult.

Potential issues with TephritID and CarabID

TephritID and CarabID tended to identify taxa with relatively high confidence in baseline images but not in the ‘New species’ category. This suggests overconfident misclassification, and a decline in the models’ ability to generalise as images accumulate variation absent from the training data. This is a common challenge when working with neural networks such as CNNs, and a key obstacle to the further development of deep learning techniques [15]. Generalisation failure is widely cited as a persistent challenge in machine learning, and often results from limited data availability in the training set [16]. According to Zohuri & Moghaddam [17], CNN-based deep learning models require large training datasets of at least 10,000 images as reference examples. The dataset used to train TephritID contained only approximately 800 images, whereas CarabID’s contained 1,764 original beetle images, totalling 4,143 images after augmentation. This suggests that larger datasets may be required to increase model accuracy and reduce inconsistency in identifying ground beetles to their correct taxa.

Another consequence of limited training data is overfitting [18], in which the weights the model learns for certain features are optimised for the training data but perform poorly on new data [19]. An overfitted model cannot generalise to data it was not previously exposed to, so it predicts well on the training dataset but poorly on testing datasets [20]. Overfitting particularly affects models trained on small samples, where noise tends to have a greater influence, and increasing image augmentation is believed to help reduce it [20], [21]. The variability in our results points toward potential errors somewhere between model training and testing, or a model that is not yet rigorous enough to provide consistently reliable predictions. Images used for testing the CarabID model were taken at slightly different angles under different lighting conditions, and some appeared to have a lower resolution than others. In theory, if the CNN model were performing as expected, image quality and position should not affect the results once standardising pre-processing has been applied [14]. However, there were large differences between CarabID’s scores for modified and unmodified images in the ‘New species’ and ‘Other Coleoptera’ categories, which further indicates possible overfitting.
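Overfitting is usually diagnosed by comparing accuracy on the training images with accuracy on held-out test images. A minimal sketch follows, assuming per-image correct/incorrect flags are available for both sets. The 0.2 cut-off is an arbitrary illustrative value, not a published standard, and the toy flags are made up.

```python
from typing import Sequence


def accuracy(correct_flags: Sequence[bool]) -> float:
    """Fraction of images predicted correctly (0.0 for an empty set)."""
    return sum(correct_flags) / len(correct_flags) if correct_flags else 0.0


def generalisation_gap(train_flags: Sequence[bool],
                       test_flags: Sequence[bool]) -> float:
    """Training accuracy minus test accuracy; a large positive gap suggests overfitting."""
    return accuracy(train_flags) - accuracy(test_flags)


def looks_overfit(train_flags: Sequence[bool], test_flags: Sequence[bool],
                  max_gap: float = 0.2) -> bool:
    """Flag a model whose gap exceeds an arbitrary, illustrative tolerance."""
    return generalisation_gap(train_flags, test_flags) > max_gap


# Made-up flags: 9/10 correct on training images, 3/10 on unseen images.
seen = [True] * 9 + [False]
unseen = [True] * 3 + [False] * 7
print(round(generalisation_gap(seen, unseen), 2), looks_overfit(seen, unseen))  # 0.6 True
```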

A couple of straightforward options exist to reduce bias, spurious correlations, and misleading predictions within the model. As mentioned above, the likely culprits behind the large variation and overconfident misclassifications are overfitting, an inability to generalise, and a shortage of training data. Highly accurate classifications require sizable datasets [22], so a next step for TephritID and CarabID would be further digitisation of NZAC specimens, as well as of other taxa from around the world, for continued training. Secondly, modifications such as tilting and mirroring existing images tended to produce different predictions from those for the unmodified images. Data augmentation should have accounted for these changes, but since differences were observed, more augmentation iterations may be needed, especially given the small training dataset. Augmentation increases the number of training samples and tends to reduce overfitting [18]. This is especially relevant for the CarabID model, as only a very small proportion of unmodified specimens were correctly identified: none at the genus level, and only three images from the ‘New species’ group at the tribe level. However, these techniques have their own drawbacks, and work is under way worldwide to compensate for them. All in all, TephritID and CarabID reaffirm the potential of using wing and body images with deep learning methods to identify taxa. Their potential applications in biosecurity surveillance and conservation make them particularly relevant, especially within Aotearoa, where the robustness of these systems can be the difference between ecological balance and devastation.
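Mirroring and right-angle rotation are the simplest geometric augmentations. The plain-Python sketch below assumes an image stored as a list of pixel rows and generates its eight mirror and 90° rotation variants. It is illustrative only. The small-angle tilts mentioned above would additionally need interpolation (for example, Pillow’s `Image.rotate`), which is not shown.

```python
from typing import List, TypeVar

T = TypeVar("T")
Grid = List[List[T]]


def mirror_horizontal(img: Grid) -> Grid:
    """Flip left-right, so a head facing right now faces left."""
    return [list(reversed(row)) for row in img]


def rotate_90_clockwise(img: Grid) -> Grid:
    """Rotate a quarter-turn clockwise: reverse the row order, then transpose."""
    return [list(row) for row in zip(*img[::-1])]


def dihedral_augment(img: Grid) -> List[Grid]:
    """Return the 4 rotations of `img` plus the mirror image of each (8 variants)."""
    rotations = [img]
    for _ in range(3):
        rotations.append(rotate_90_clockwise(rotations[-1]))
    return rotations + [mirror_horizontal(r) for r in rotations]


tiny = [[1, 2],
        [3, 4]]
variants = dihedral_augment(tiny)
print(len(variants))  # 8
print(variants[1])    # [[3, 1], [4, 2]]
```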

The development of AI models through deep learning tools for entomological studies in a New Zealand context has great potential to benefit conservation and biodiversity. The web applications CarabID and TephritID may not currently be able to reliably identify the correct Tephritidae and Carabidae genera for ‘New species’ specimens that share traits with the images used in model training. Even so, future modifications could make these tools effective in classifying and identifying native and invasive insect species, reducing the time required of entomologists and researchers. Digitisation provides opportunities that will be especially useful for biosecurity surveillance, for conservation projects on threatened native and endemic species, and for helping to maintain the biodiversity of Aotearoa. In the future, it would be beneficial to refine image-based deep learning models so that they can identify fruit flies and New Zealand native beetles more accurately at finer taxonomic ranks. This would create further opportunities to monitor live fruit fly and ground beetle species for ecological research and pest eradication.

Figure 1: Example of Carabidae specimen images used to test the CarabID app. Left: original image put into the app, right: image with pre-processing corrections.

Acknowledgments

The biggest and buggiest thank you to our supervisor, Darren Ward, who was generous with guidance when it was needed, but also gave us free rein and made sure we didn’t feel we were constantly being watched. Another massive thank you is in order for Robert Hoare, Grace Hall, and Aaron Harmer for giving us the most amazing tips, and for our summer roomie Simon Connolly, who kept us sane while databasing in the lab. Lastly, we want to express gratitude to Anna Santure and Libby Liggins, who did such a fantastic job not just coordinating the whole Summer Research Program, but also caring to get to know us. “No rest for the busy bees” (André Larochelle and Marie-Claude Larivière, 2025).

[1] C. Mora, D. P. Tittensor, S. Adl, A. G. B. Simpson, and B. Worm, “How Many Species Are There on Earth and in the Ocean?,” PLoS Biology, vol. 9, no. 8, p. e1001127, Aug. 2011. doi: 10.1371/journal.pbio.1001127.

[2] M. J. Costello, “Exceptional endemicity of Aotearoa New Zealand biota shows how taxa dispersal traits, but not phylogeny, correlate with global species richness,” Journal of the Royal Society of New Zealand, vol. 54, no. 1, pp. 1–16, Apr. 2023, doi: 10.1080/03036758.2023.2198722.

[3] L. Fuller, P. M. Johns, and R. M. Ewers, “Assessment of protected area coverage of threatened ground beetles (Coleoptera: Carabidae): a new analysis for New Zealand,” New Zealand Journal of Ecology, vol. 37, no. 2, pp. 184–192, Jan. 2013. [Online]. Available: https://newzealandecology.org/nzje/3082.pdf.

[4] R. B. Allen and W. G. Lee, Eds., Biological invasions in New Zealand. Springer Science & Business Media, 2006, doi: 10.1007/3-540-30023-6.

[5] S. L. Goldson, “Biosecurity, risk and policy: a New Zealand perspective,” Journal für Verbraucherschutz und Lebensmittelsicherheit, vol. 6, no. S1, pp. 41–47, Mar. 2011, doi: 10.1007/s00003-011-0673-8.

[6] S. Ekesi, M. K. Billah, P. W. Nderitu, S. A. Lux, and I. Rwomushana, “Evidence for competitive displacement of Ceratitis cosyra by the invasive fruit fly Bactrocera invadens (Diptera: Tephritidae) on mango and mechanisms contributing to the displacement,” Journal of Economic Entomology, vol. 102, no. 3, pp. 981–991, Jun. 2009, doi: 10.1603/029.102.0317.

[7] S. Sultana, J. B. Baumgartner, B. C. Dominiak, J. E. Royer, and L. J. Beaumont, “Impacts of climate change on high priority fruit fly species in Australia,” PLoS One, vol. 15, no. 2, p. e0213820, Feb. 2020, doi: 10.1371/journal.pone.0213820.

[8] A. Larochelle and M.-C. Larivière, “Carabidae (Insecta: Coleoptera): synopsis of supraspecific taxa,” Fauna of New Zealand, vol. 60, Nov. 2007, doi: 10.7931/J2/FNZ.60.

[9] O. L. P. Hansen et al., “Species‐level image classification with convolutional neural network enables insect identification from habitus images,” Ecology and Evolution, vol. 10, no. 2, pp. 737–747, Dec. 2019, doi: 10.1002/ece3.5921.

[10] W. Rawat and Z. Wang, “Deep convolutional neural networks for image classification: A comprehensive review,” Neural Computation, vol. 29, no. 9, pp. 2352–2449, Sep. 2017, doi: 10.1162/neco_a_00990.

[11] T. T. Høye et al., “Deep learning and computer vision will transform entomology,” Proceedings of the National Academy of Sciences, vol. 118, no. 2, Jan. 2021, doi: 10.1073/pnas.2002545117.

[12] D. C. Amarathunga, J. Grundy, H. Parry, and A. Dorin, “Methods of insect image capture and classification: A systematic literature review,” Smart Agricultural Technology, vol. 1, p. 100023, Dec. 2021, doi: 10.1016/j.atech.2021.100023.

[13] S. Coulibaly, B. Kamsu-Foguem, D. Kamissoko, and D. Traore, “Explainable deep convolutional neural networks for insect pest recognition,” Journal of Cleaner Production, vol. 371, p. 133638, Oct. 2022, doi: 10.1016/j.jclepro.2022.133638.

[14] D. Ward and B. Martin, “Trialling a convolution neural network for the identification of Braconidae in New Zealand,” Journal of Hymenoptera Research, vol. 95, pp. 95–101, Feb. 2023, doi: 10.3897/jhr.95.95964.

[15] J. Wang, J. Ai, M. Lu, J. Liu, and Z. Wu, “Predicting neural network confidence using high-level feature distance,” Information and Software Technology, vol. 159, Apr. 2023, doi: 10.1016/j.infsof.2023.107214.

[16] S. C. Haynes, P. Johnston, and E. Elyan, “Generalisation challenges in deep learning models for medical imagery: insights from external validation of COVID-19 classifiers,” Multimedia Tools and Applications, pp. 1-20, Feb. 2024, doi: 10.1007/s11042-024-18543-y.

[17] B. Zohuri, “Deep learning limitations and flaws,” Mod. Approaches on Mater. Sci., vol. 2, no. 3, pp. 241–250, Jan. 2020, doi: 10.32474/MAMS.2020.02.000138.

[18] P. Thanapol, K. Lavangnananda, P. Bouvry, F. Pinel, and F. Leprévost, “Reducing overfitting and improving generalization in training convolutional neural network (CNN) under limited sample sizes in image recognition,” in Proc. InCIT, pp. 300–305, Oct. 2020, doi: 10.1109/InCIT50588.2020.9310787.

[19] M. K. Rusia and D. K. Singh, “An efficient CNN approach for facial expression recognition with some measures of overfitting,” International Journal of Information Technology, vol. 13, no. 6, pp. 2419–2430, Sep. 2021, doi: 10.1007/s41870-021-00803-x.

[20] X. Ying, “An overview of overfitting and its solutions,” Journal of Physics: Conference Series, vol. 1168, no. 2, p. 022022, Feb. 2019, doi: 10.1088/1742-6596/1168/2/022022.

[21] T. Islam, Md. S. Hafiz, J. R. Jim, Md. M. Kabir, and M. F. Mridha, “A systematic review of deep learning data augmentation in medical imaging: Recent advances and future research directions,” Healthcare Analytics, vol. 5, p. 100340, Jun. 2024, doi: 10.1016/j.health.2024.100340.

[22] M. Valan, K. Makonyi, A. Maki, D. Vondráček, and F. Ronquist, “Automated taxonomic identification of insects with expert-level accuracy using effective feature transfer from convolutional networks,” Systematic Biology, vol. 68, no. 6, pp. 876–895, Mar. 2019, doi: 10.1093/sysbio/syz014.

Angie is in her final year of a conjoint degree in Biology and Economics. She has recently completed a summer research project at Manaaki Whenua, exploring the potential use of machine learning to identify New Zealand insect taxa. Angie is also keenly interested in behavioural ecology and plant-animal interactions.

Angie Zhu - BCom/BSc Conjoint, Biological Sciences and Economics

Hope is in her final year of the Zoology pathway of a BSc. Her fascination with the diversity of insects coupled with a soft spot for the misunderstood has drawn her towards a career in entomology and conservation. Hope also recently completed a Summer Research Studentship at Manaaki Whenua.